Outcomes

Out of the 50+ submissions, we were one of the top eight teams selected to present our work at ISRO and NASA’s Johnson Space Center. Our work was described as “one of the most practical, and research driven designs I have ever seen from students; (there are) a lot of interesting elements that we at NASA will seriously take forward.”

Project opportunity

The main goal of NASA SUITS is to create an innovative spacesuit user interface leveraging AR. The UI must be developed on an AR platform like the Microsoft HoloLens and must demonstrate how AR can be used in a crew member's display during EVA (extravehicular activity) operations on the lunar surface in a non-obtrusive way. The five main kinds of extravehicular activity that astronauts will perform on the lunar surface, and that must have AR support, are:

egress

rover commanding

navigation

messaging

geosampling

Team structure

Timeline

Design process

My role

I was a UX designer working primarily with the entire 10-member UX department. In later phases of design, I was the Product Manager and UX design lead on a six-member agile sub-team, composed as shown above.

I was involved in all stages of research and main state design, but was particularly responsible for the geosampling component.

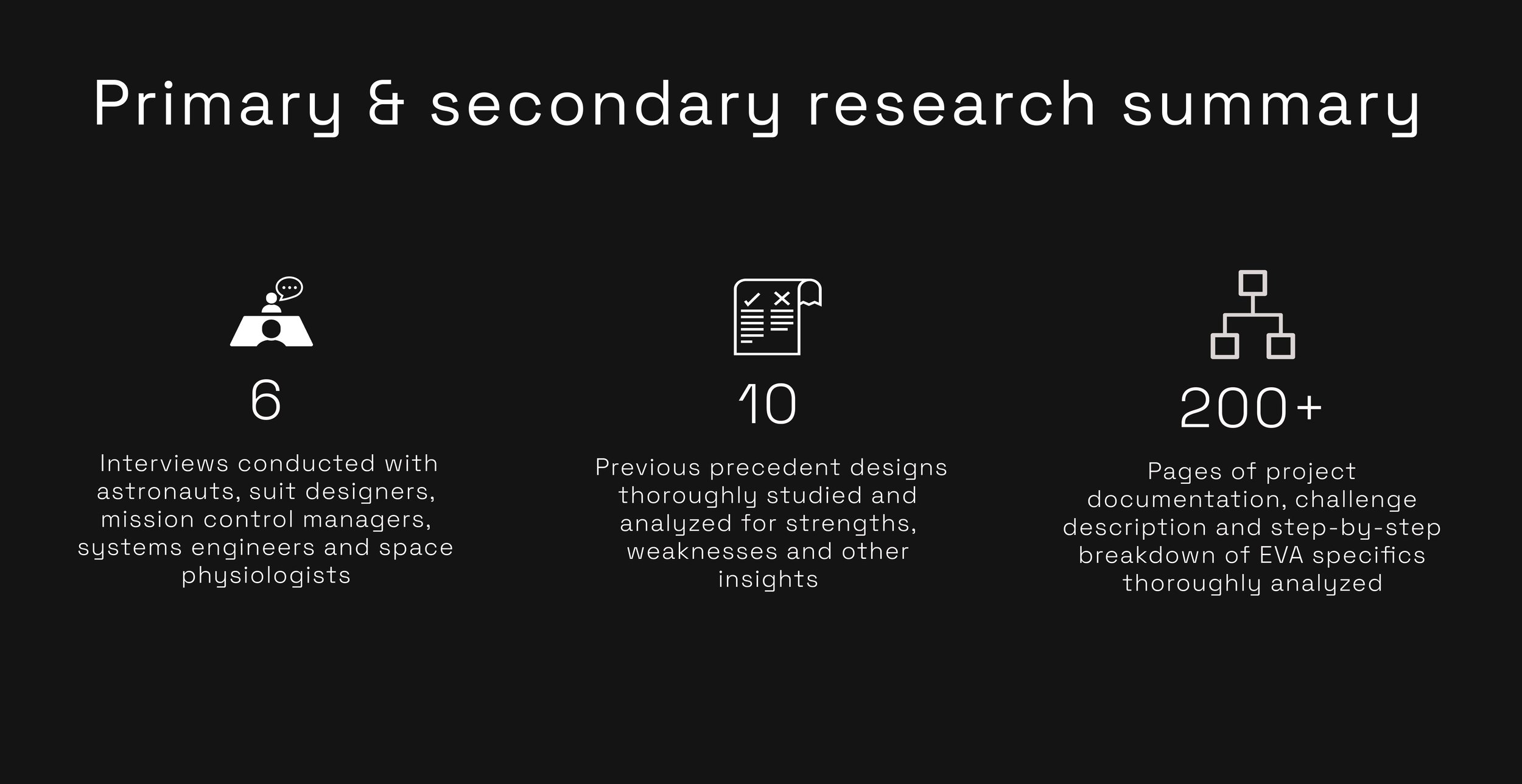

What is the experience of being in space?

One of the most important lessons you learn as a designer is that ‘I am not the user’, and this has never been more true! As a team, none of us has had even a remotely similar experience to being in space. We didn’t know of any ‘common trends’ in spacesuit design like we would for a mobile application, or ‘core functionalities’ in astronaut gear - in short, we were completely in the dark and realized we had a lot of vital research to do, starting from the basics of astronaut training.

We had to understand what fundamental actions like holding a piece of equipment are like in space, how vision is impacted by dim lighting, the various kinds of information needed during different processes of an EVA, communication protocols between astronauts…and the list goes on. So what did we do?

We interviewed real astronauts.

As a team, we contacted people we knew who were involved with NASA. We had wonderful meetings with them and asked whether they could put us in touch with some of their other colleagues. All in all, we were able to interview 6 people from different NASA postings, covering many aspects of a space mission - Mission Control, Space Technicians, Suit Designers and, of course, Astronauts. As the UX department, we split up into mini teams of 2 to conduct the interviews so that we could all have the exciting opportunity to interact with a NASA mission crew member. I personally interviewed an astronaut and a suit designer!

After each interview, we held long collective discussions to identify the main insights from each conversation and note down clarifications and followups.

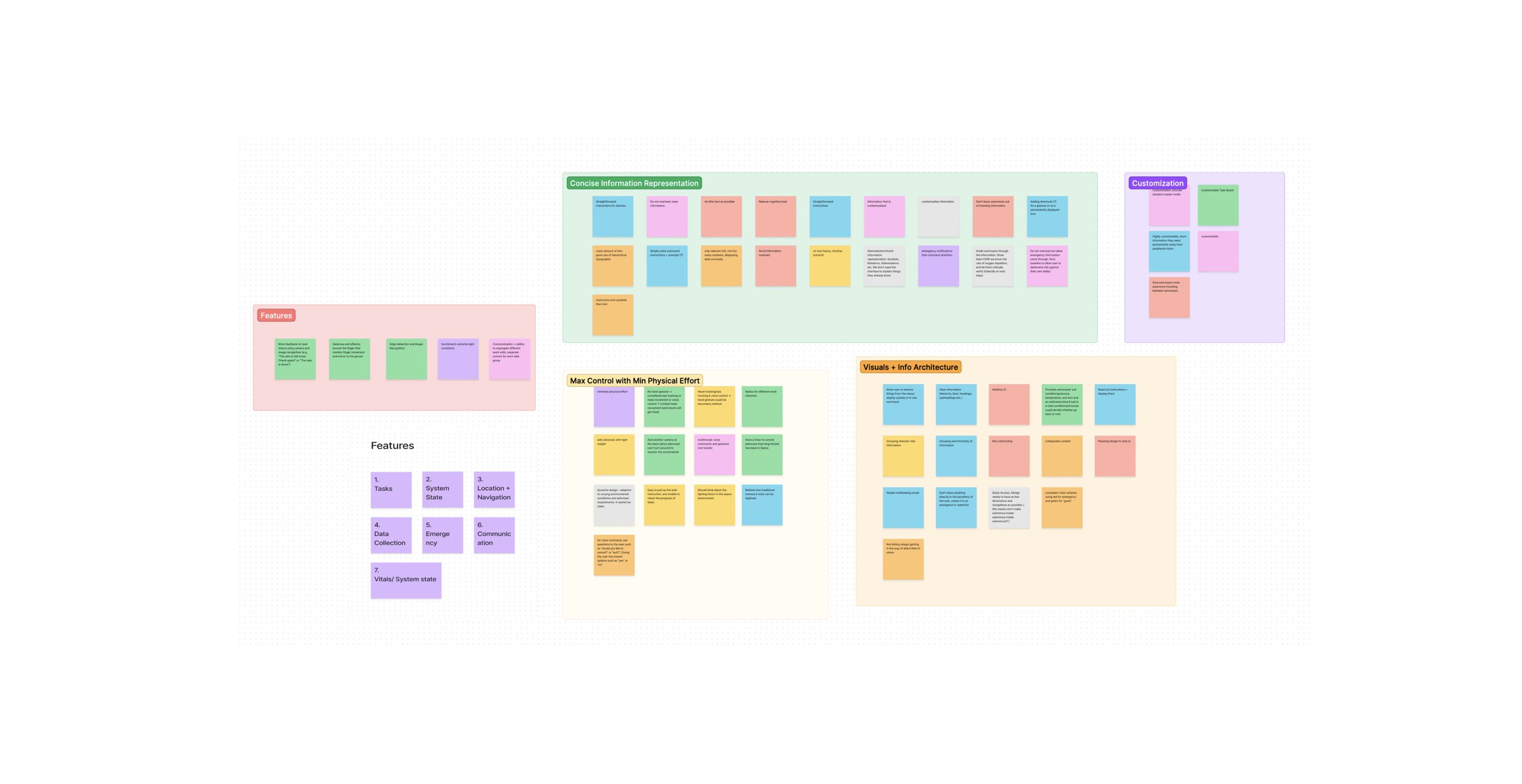

Group whiteboarding and affinity mapping session, held after all the interviews, to summarize and cluster our findings and identify core design tenets.

Core Design Tenets

This was a large-scale, complex project, and as the UX department, we would all break up into different agile subteams in the upcoming sprints, working on different components of the overall interface. To ensure consistency across subteams, we established a few guidelines based on our research that should always be at the fore of our designs. They would keep us all aligned towards the primary objectives and become an aptly proverbial ‘North Star’ in case we got lost in the specifics of our own components.

Peripheral Design & space utilization

An EVA is the most high stakes situation for an astronaut. It’s a matter of life and death in an extremely hostile and foreign environment and unobstructed vision is absolutely crucial. Our AR interface must never, to the extent possible, cover the central field of vision (FOV). We must utilize peripheral screen space of the AR display headset effectively in a non-strenuous UI.

Dual interface interaction methods

The most conventional form of interaction with AR interfaces is hand gestures. Based on our interviews, astronauts are strongly against gesturing because of the limited mobility in a spacesuit and the exaggerated glove sizes, which cause imprecision when pointing. We must use a different method of interaction AND a failsafe in case of errors.

Concise information display on priority basis

AR devices can be uncomfortable and unfamiliar viewing experiences, especially so in space. Too much moving textual information is very disorienting and we must ideate more concise ways of information delivery. All data relevant to a screen must be prioritized to avoid overload and panic with textual information kept to a minimum.

Clarity over visual appeal

While it is tempting to allow visual design to be our differentiator from the other submissions, we have to remember the astronaut’s requirements. AR interfaces are tricky to operate, and large hit boxes, static color schemes, clear boundaries between calls to action and simple typography are what astronauts need in a high pressure situation, over aesthetic minimalism and modern designs. Ironically, as we propel into a new era of space technology, our designs must initially remain old-school!

Accessibility

While astronauts are required to meet the highest fitness requirements - including visual acuity, height, medical conditions, physical ability and mental capacity - we must be careful of potential space-specific accessibility concerns that we uncovered in our research, for example hypoxia, suit malfunctions, constrained movements in zero gravity and visual strain in harsh high-contrast lighting conditions.

Intellect and Familiarity

Astronauts have a high capacity to learn novel processes and technologies. We can explore unconventional designs and layouts to meet the other design tenets under the safe assumption that astronauts will learn the ins and outs of the AR interface prior to actual deployment. However, in a tense situation, conventional iconography, secondary indicators and processes that people are familiar with can be the difference in making the right decisions at the right time.

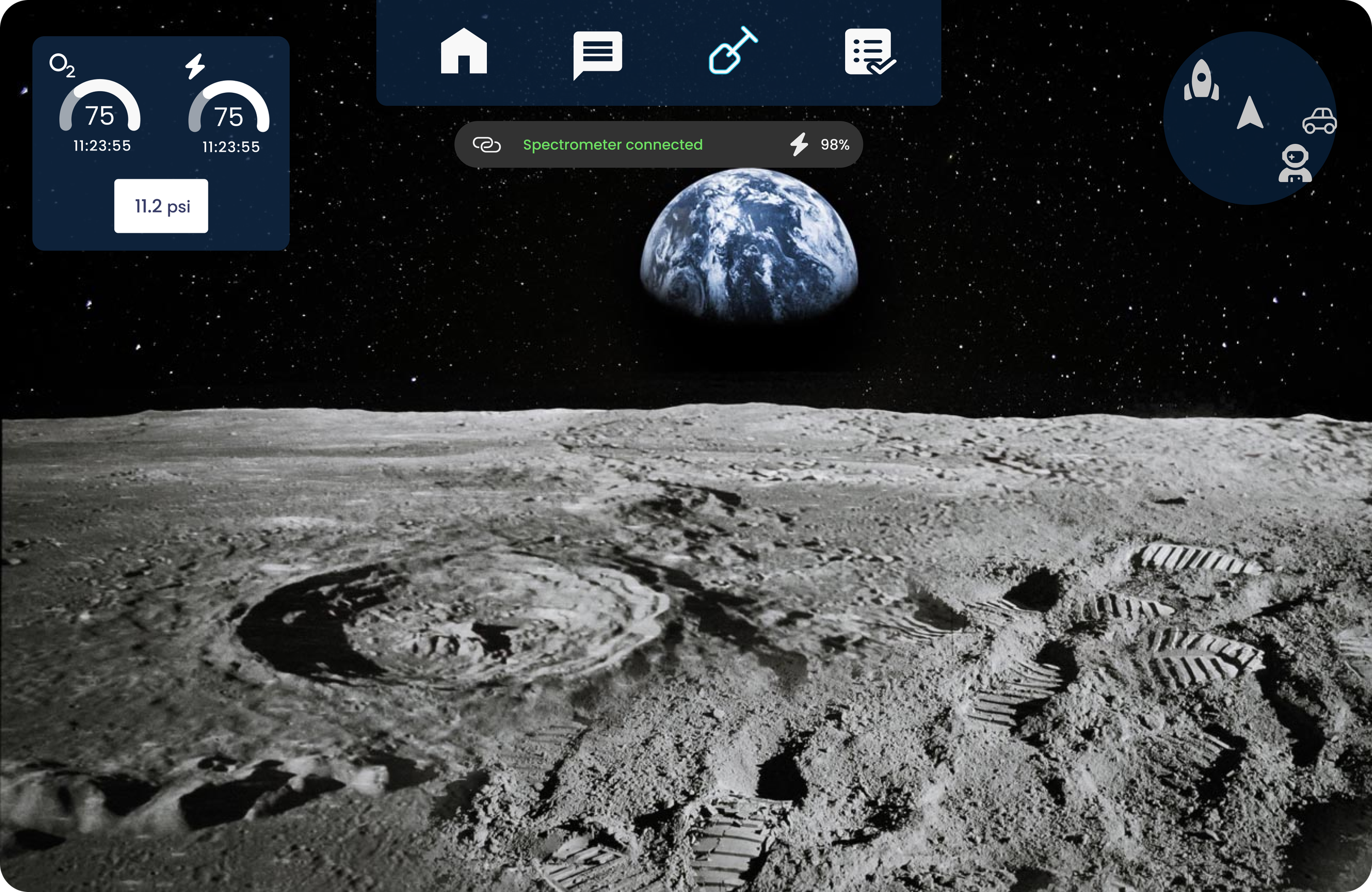

main/home state

We made lots of sketches to ideate the structure of the interface home screen and identify high priority elements. We also had to decide on the layering of screens, since AR platforms have the advantage of utilizing a third dimension of depth.

We went one step further in testing out our layouts by building curved display mockups for physical prototyping. Doing so gave us a better idea of depth and distances and allowed us to create ‘zones’ of comfortable vision where elements could be placed.

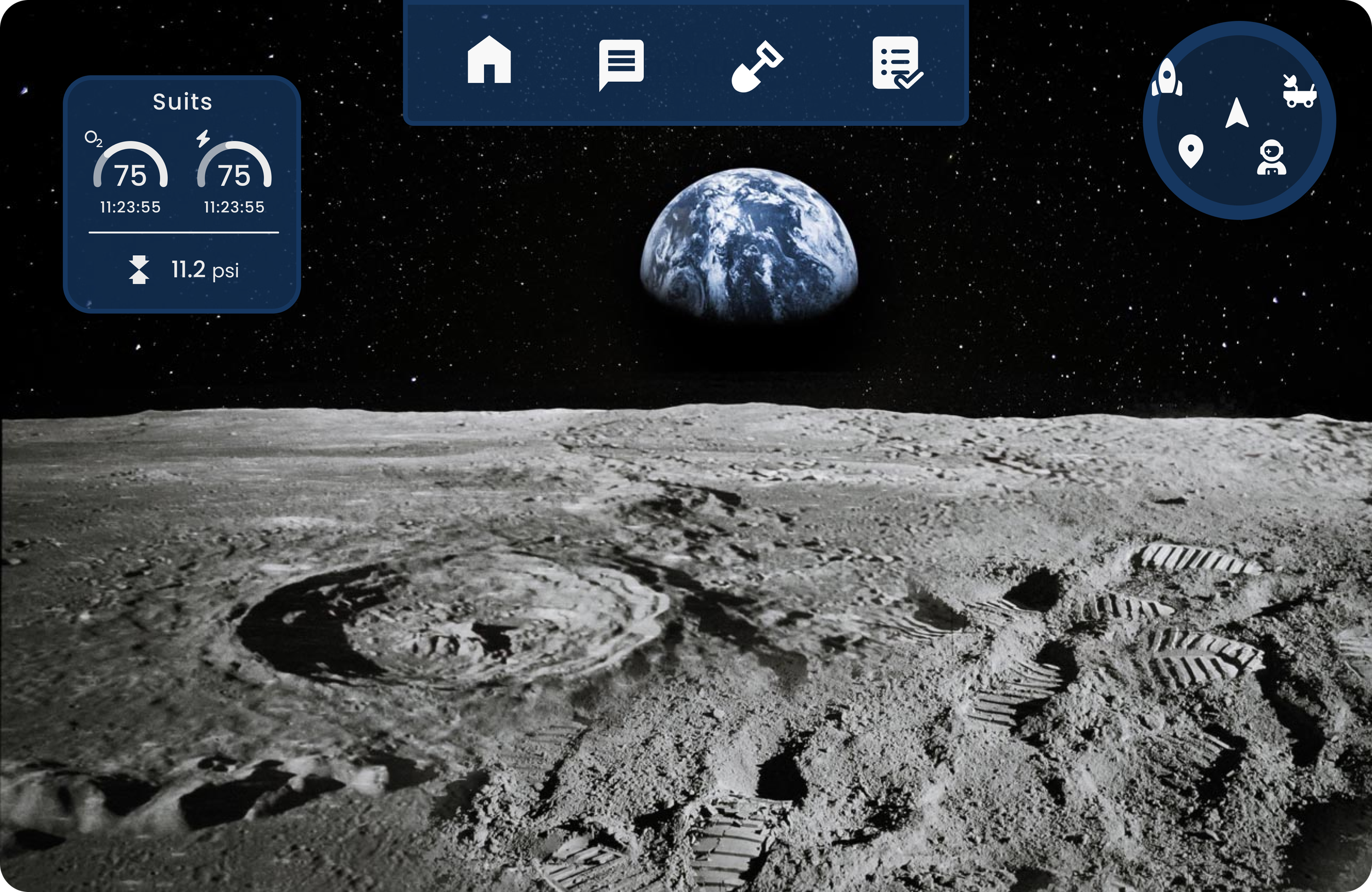

Representation of oxygen, pressure and suit battery (the three most important components an astronaut must always be aware of) with conventional iconography and visual cues. We display the percentage as a bold number that’s called out, a visual ‘progress curve’ that contextualizes that information, and the estimated time remaining.

After some repeated testing and back and forth with the development team, we proceeded with the following main state screen

We learned a lot from this Home Screen about the direction we wanted to take our designs in. After the development team made a working prototype of this main screen on the Microsoft HoloLens, we were able to quickly identify that eye tracking was NOT a feasible method of interaction. There were too many instances of accidental triggering of buttons and it was difficult to differentiate between an intentional ‘blink’ towards a component and a general gaze in that direction. Head tracking yielded far better results and we were able to define minimum separations between items. We decided on voice commands as the secondary means of interaction. The use of iconography as an alternate means of conveying information panels was well received by our astronauts when we invited them for our first Human-In-The-Loop (HITL) test. We were also able to narrow down on a robust color scheme and style guide by testing our prototype in varying lighting conditions.
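One common way to keep a gaze-driven interface from firing on a passing glance is a dwell timer: a target only triggers after the cursor has rested on it for a set time. The sketch below illustrates that general idea and is not a record of what our development team built. The class name `DwellSelector`, the `update` method and the default threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellSelector:
    """Fires a target only after the head-gaze cursor rests on it long enough."""

    dwell_seconds: float = 1.0  # hypothetical threshold, not a project value
    _target: Optional[str] = None
    _elapsed: float = 0.0

    def update(self, gazed_target: Optional[str], dt: float) -> Optional[str]:
        """Call once per frame with the target under the cursor (or None).

        Returns the target id on the frame it triggers, otherwise None.
        """
        if gazed_target != self._target:
            # The gaze moved: restart the timer on the new target (or on nothing).
            self._target = gazed_target
            self._elapsed = 0.0
            return None
        if gazed_target is None:
            return None
        self._elapsed += dt
        if self._elapsed >= self.dwell_seconds:
            # Re-arm so that holding the gaze does not fire on every frame.
            self._elapsed = 0.0
            return gazed_target
        return None
```

A sweep of the head across several buttons resets the timer each time the target changes, so only a deliberate pause selects anything. Minimum separation between items matters for the same reason: small head movements should not flip the cursor between neighbouring targets.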

One of the most important decisions we were able to make using all that we learned from rapid initial prototyping was about how to structure our AR panels for some critical sequential functions that we identified. Specifically, we narrowed down on layering of panels over each other - something like popup screens - as opposed to side by side real world seeded panels.

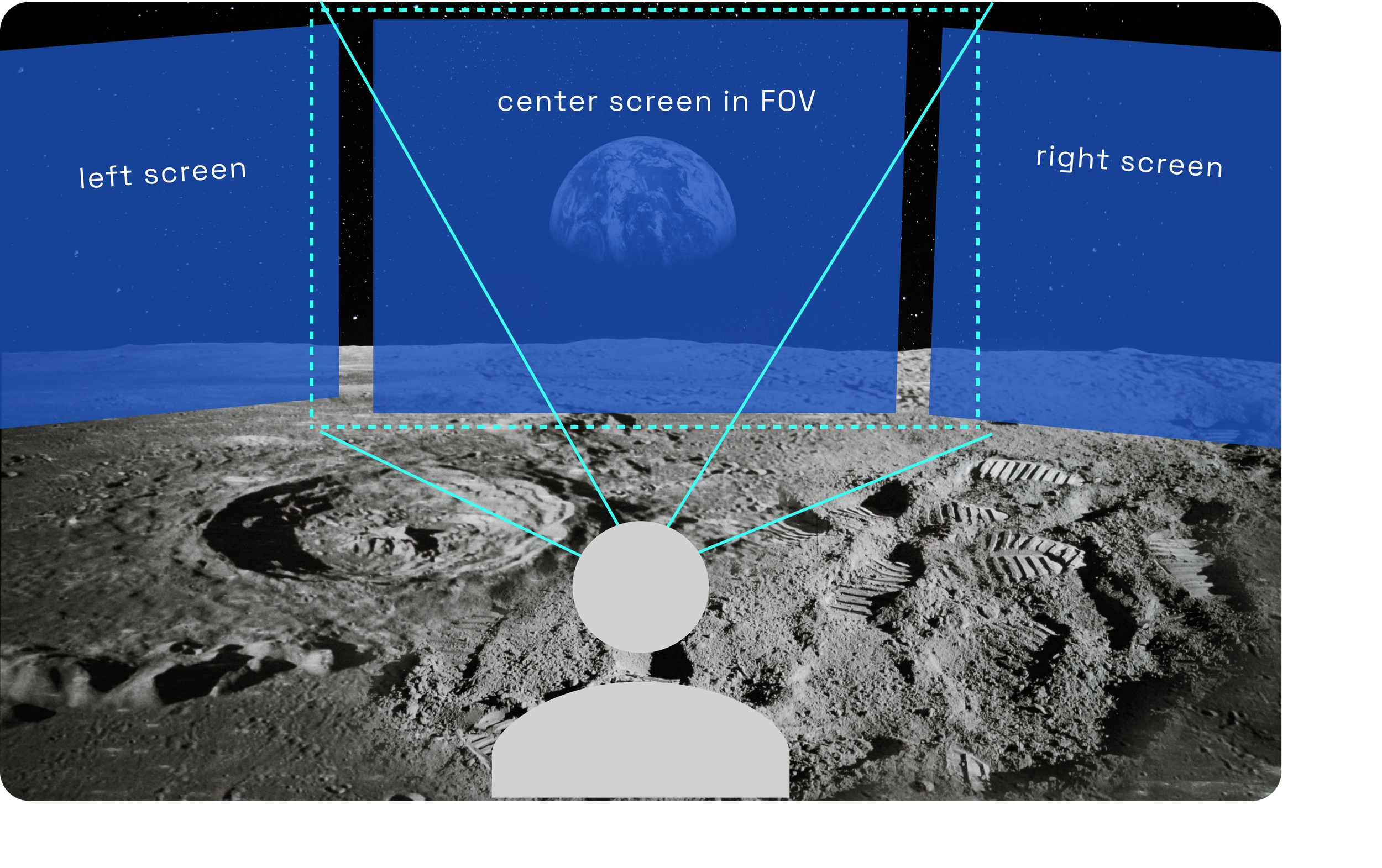

The above figures represent how a seeded panel is structured. Panel positions are fixed relative to the AR headset’s axis and only ‘move in’ to the field of view when the observer turns. By changing their direction of sight towards the panels’ fixed positions, the observer brings the existing left and right screens into the device’s current field of view.

Seeded panels are NOT comfortable viewing experiences, particularly for a head gaze system in a low stability environment like the moon.

Having to physically move (turn their heads) to access certain functions of the interface is cumbersome and diverts the astronaut’s attention from the task at hand. In dire emergency cases where physical mobility is a constraint, it may also not be possible for astronauts to turn their heads by the required amount to access core components.

In emergency or quick response situations, astronauts should not have to be thinking about which screen they’re currently on and where they might find the utility they’re looking for.

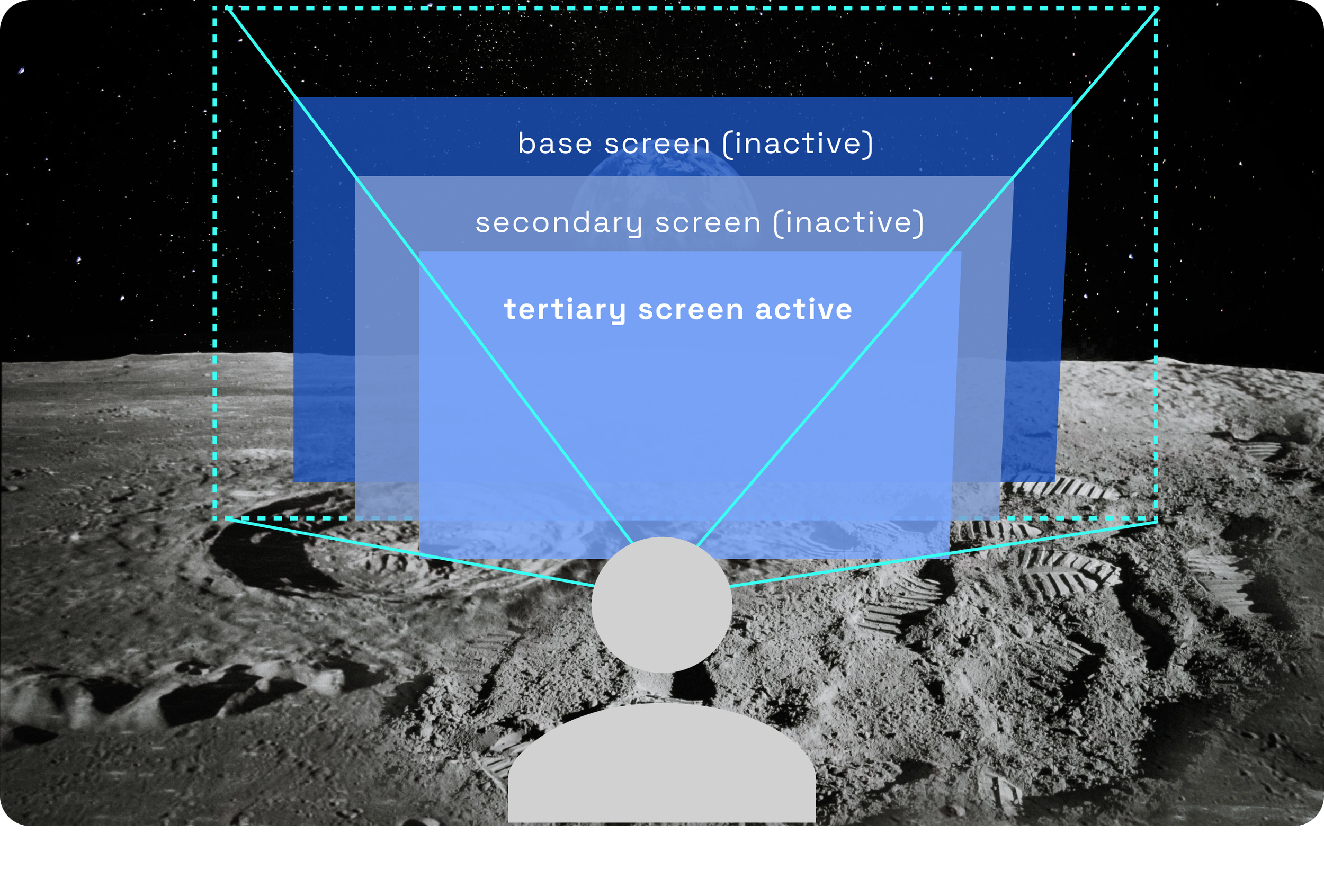

Compare this with a layered panel structure which is what we decided on

The above figures represent how a layered panel is structured. New or related screens are layered over the other when items are clicked. Each additional layer that pops up renders the hit boxes and potential actions from the previous layer inactive. Only elements from the foremost layer can be accessed and subsequent layers must be closed if elements from the preceding layers need to be used.
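The layering rule described above can be sketched as a simple stack in which only the top entry accepts input. This is a minimal illustration under our own naming (`LayerStack`, `open`, `close`, `hit` are hypothetical) and not the prototype's actual code.

```python
from typing import List, Optional


class LayerStack:
    """Stack of UI layers in which only the foremost layer accepts input."""

    def __init__(self, base_layer: str) -> None:
        # The base (home) layer is always present at the bottom of the stack.
        self._layers: List[str] = [base_layer]

    @property
    def active(self) -> str:
        """Name of the foremost layer."""
        return self._layers[-1]

    def open(self, layer: str) -> None:
        """Layer a new screen over the current one."""
        self._layers.append(layer)

    def close(self) -> Optional[str]:
        """Close the foremost layer; the base layer is never closed."""
        if len(self._layers) == 1:
            return None
        return self._layers.pop()

    def hit(self, layer: str, element: str) -> Optional[str]:
        """Return the element if its layer is foremost, otherwise ignore the hit."""
        return element if layer == self.active else None
```

For example, after `stack.open("geosampling")` a hit on a home-screen element returns `None` until `stack.close()` is called, which mirrors the inactive hit boxes on the layers underneath.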

Layered panels have the potential to compose a far more usable interface, specific to the astronaut’s environment and constraints. We must, however, design them well in accordance with our established design tenets to make them effective.

Layered panels allow for a clear representation of information hierarchy and flow. With novel technologies in uncomfortable environments under high stakes circumstances, astronauts should be able to have a mental map of how they got from one utility to another. If implemented in a non-obtrusive way with the right blurring and opacity, some critical sequential operations can be represented this way.

It allows for the creation of a ‘home’ or base screen which can be the starting point of interface navigation. This is in line with conventional digital user experiences and gives us the ability to categorize utilities into groupings with logical hierarchies and prioritize them on the display.

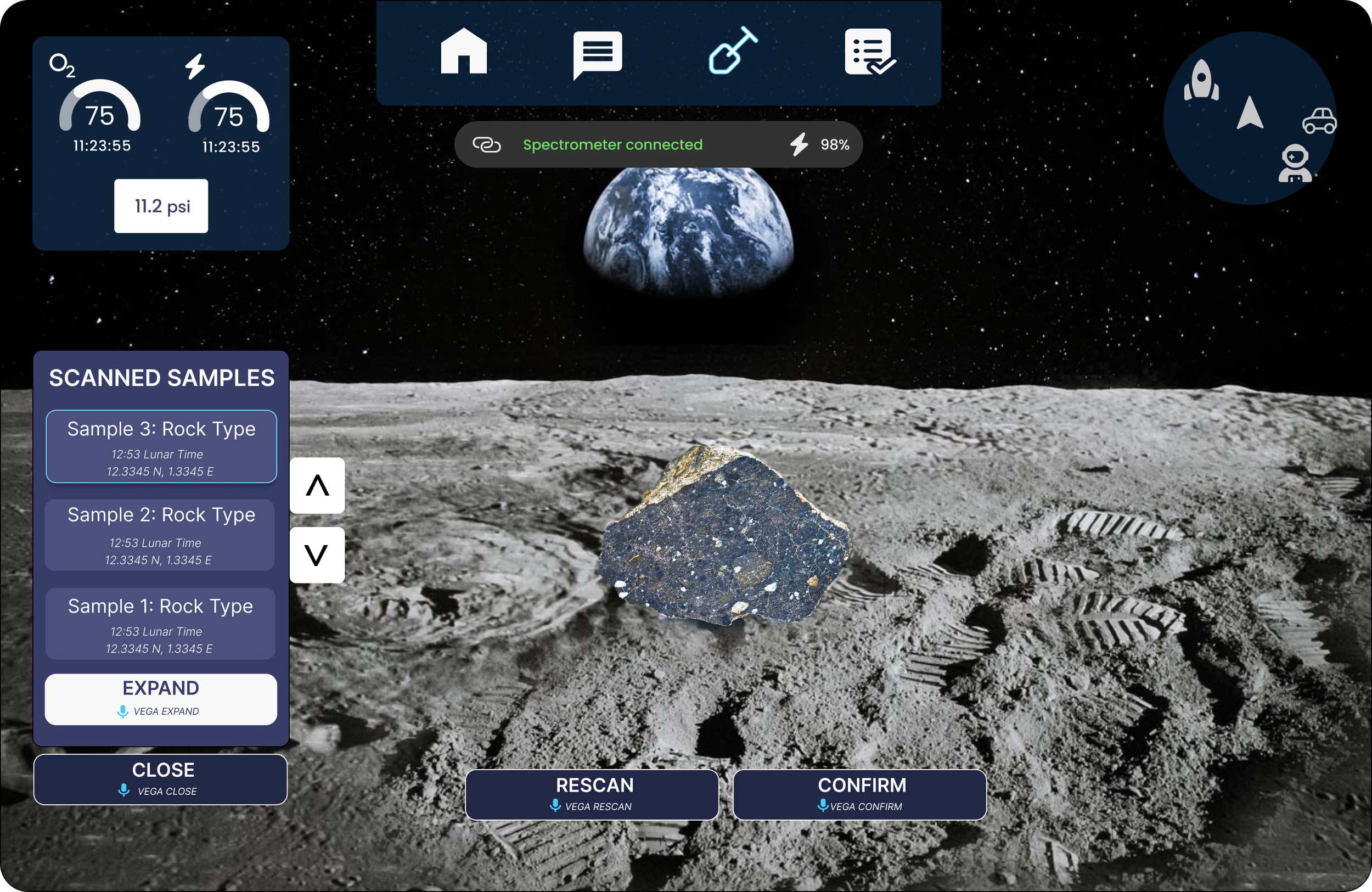

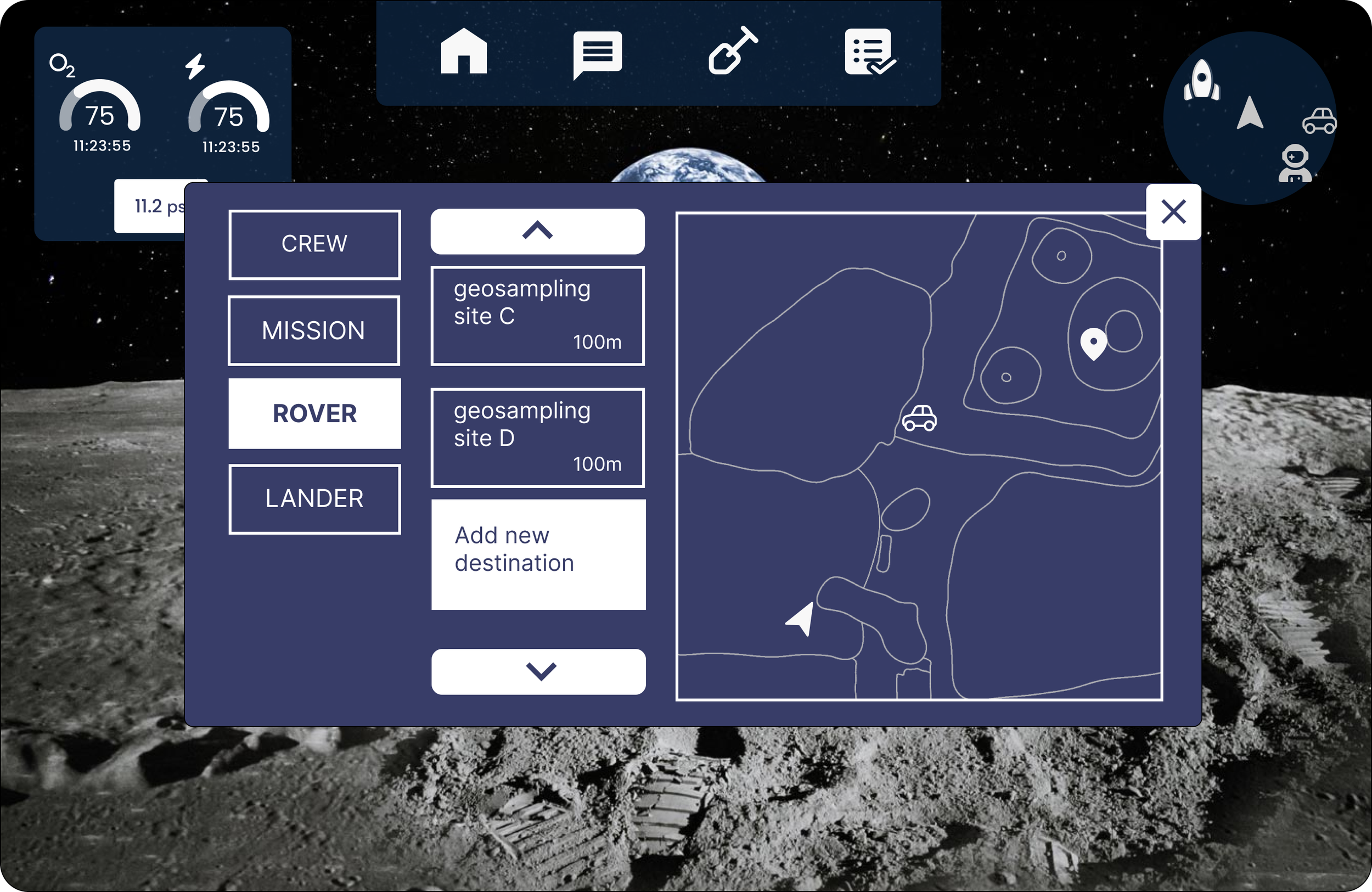

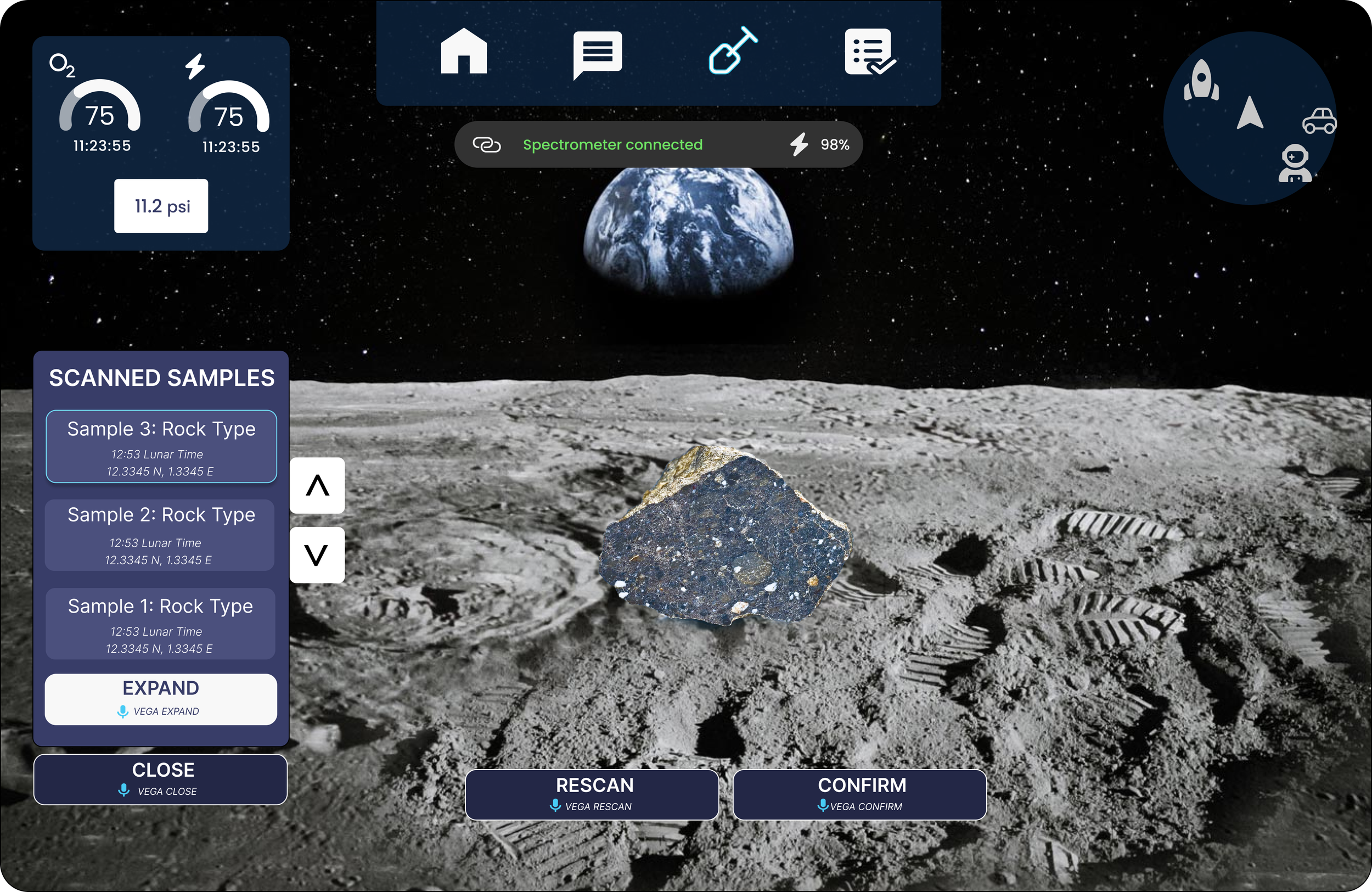

Some examples of how we used this structure from the rover, messaging and geosampling components are below

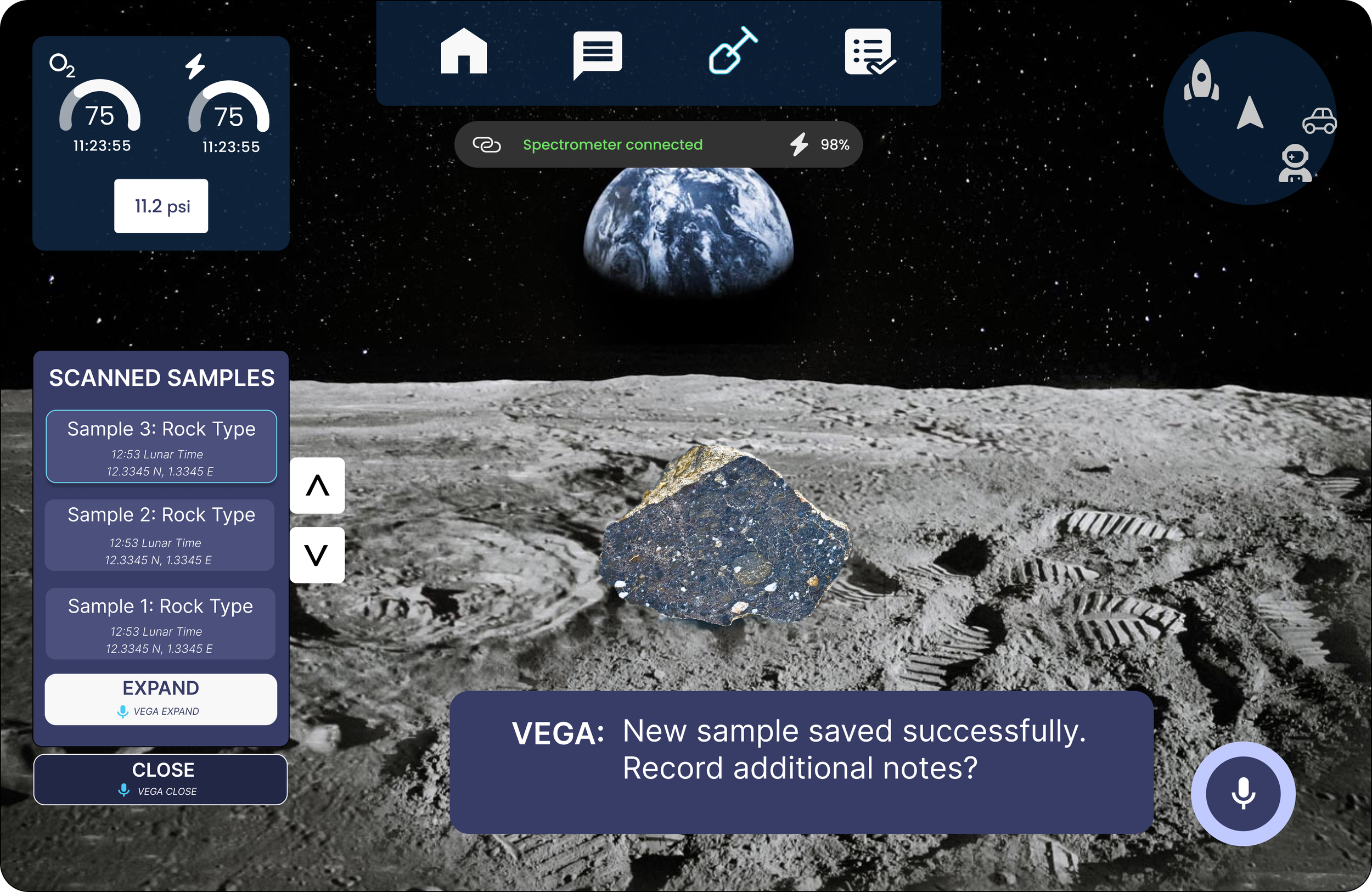

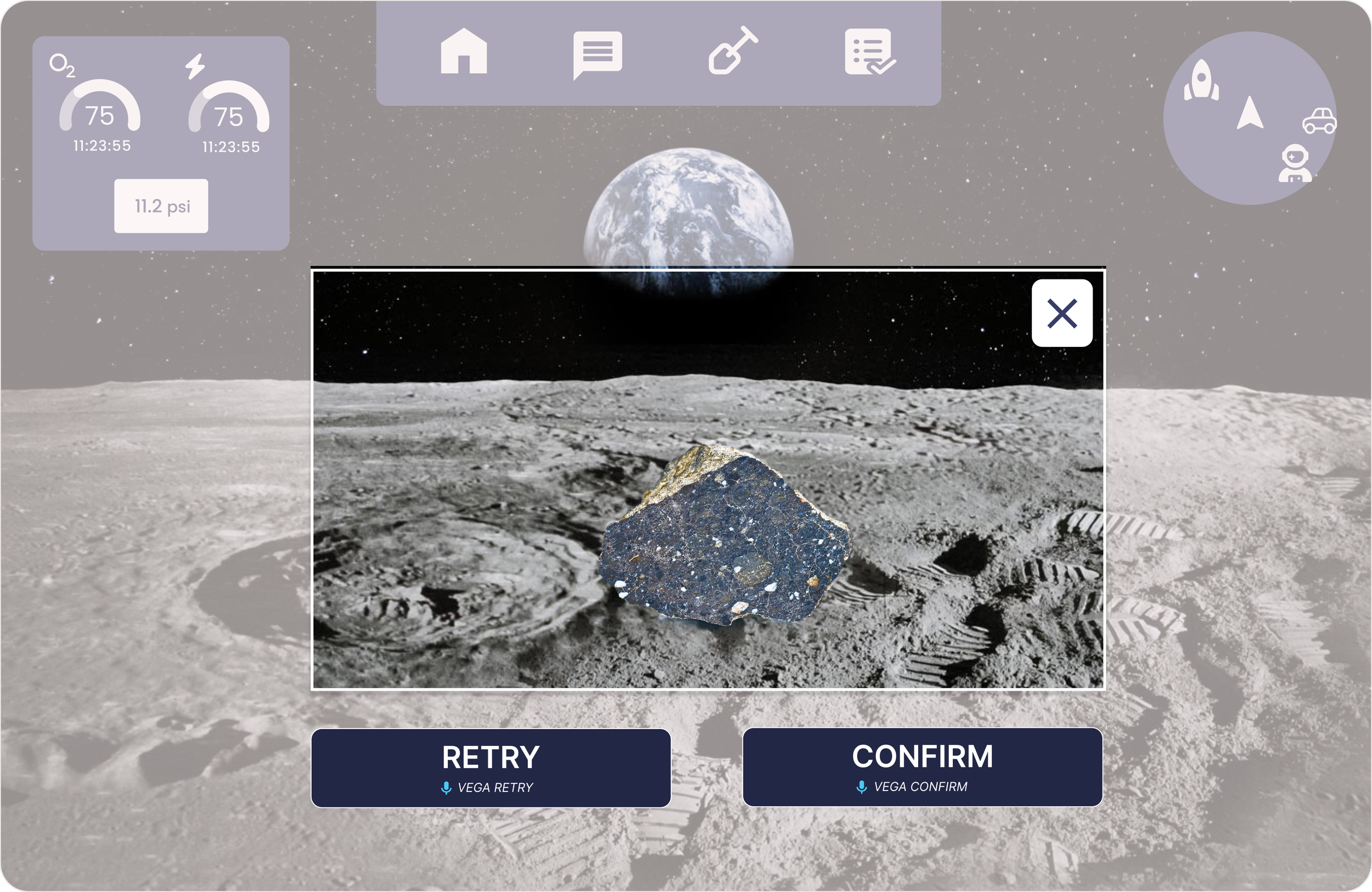

We will see this screen in detail in the geosampling section, but here the geosampling frames are layered over the Home Screen without overlap. The contrast helps in differentiation and the Home Screen hit zones are inactive.

Here, the rover screens share some overlap with the Home Screen. This was unavoidable, since the terrain map must be large and there are quite a few logical actions that need to be performed. For such a screen, the contrast helps differentiate between different layers, and the Home Screen elements are all inactive till the rover screen is closed.

geosampling

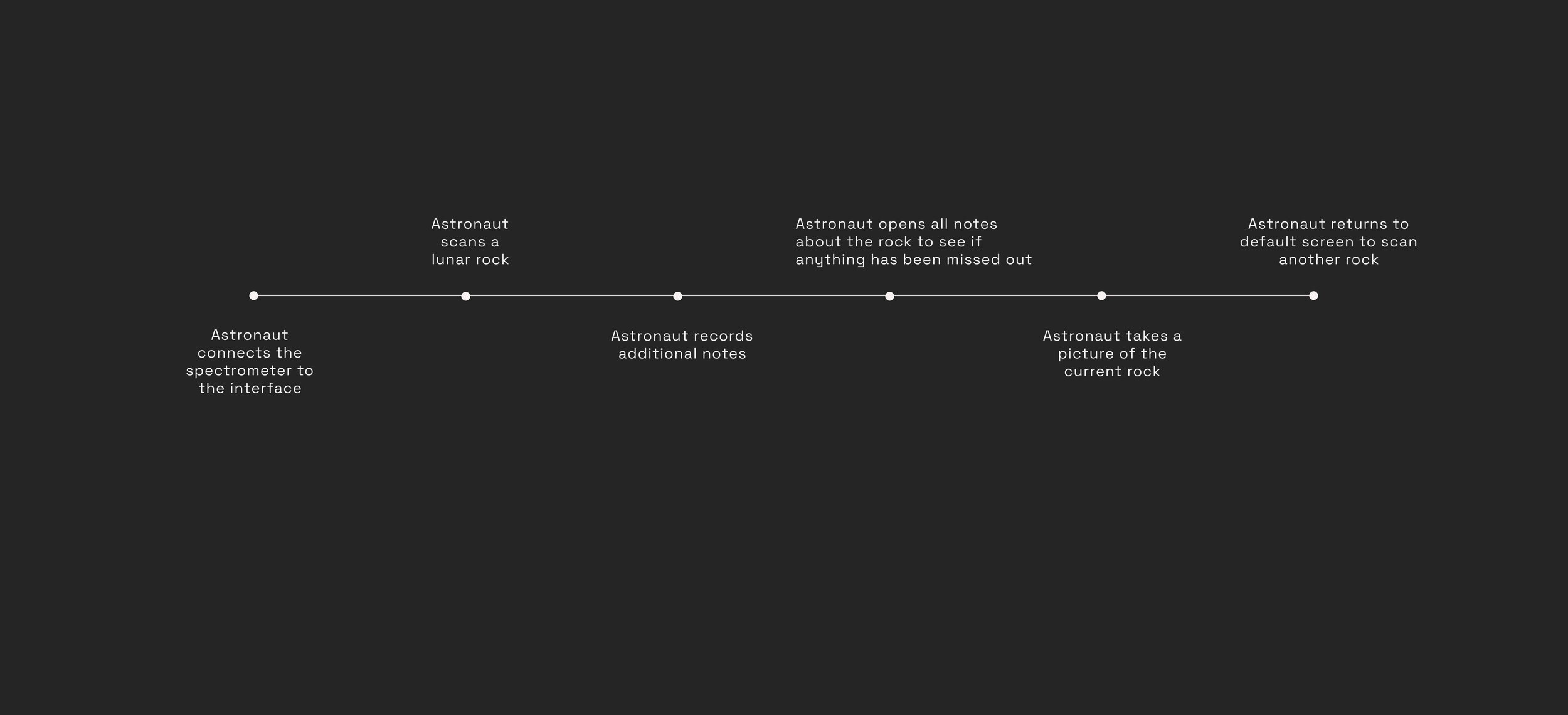

In a geosampling EVA, the astronaut is required to scan lunar rock samples using an external connected device called the spectrometer. The spectrometer scans the rock for basic information like chemical composition, size, etc., following which the astronaut can add additional observations into the ‘file’ created for that particular rock. I was part of the agile subteam working on this particular component along with two of my UX department teammates and a few members from the development department.

Based on our interviews and project specification lit review, we first created the various user journeys and scenarios that an astronaut might encounter on a typical geosampling mission.

Minimap that makes use of iconography to orient the astronaut on the most important locations - the home base, the rover (if on a rover mission), the target location (if it was preset) and the next closest astronaut. Distances are contextualized by the placement of the icons on the minimap - locations further away from the astronaut are placed at the periphery and closer locations are towards the astronaut’s marker.
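One way to place icons so that far locations sit at the rim and near ones sit close to the astronaut’s marker is to scale distance linearly up to a maximum range and pin anything beyond it to the edge. The sketch below assumes that linear scaling; the function name `minimap_position` and its parameters (`range_m`, `radius_px`) are hypothetical and chosen only for illustration.

```python
import math
from typing import Tuple


def minimap_position(
    dx_m: float, dy_m: float, range_m: float, radius_px: float
) -> Tuple[float, float]:
    """Map an offset from the astronaut (metres) to minimap pixels.

    The result is relative to the astronaut's marker at the minimap centre.
    """
    distance = math.hypot(dx_m, dy_m)
    if distance == 0:
        return (0.0, 0.0)
    # Scale linearly up to range_m; anything farther is pinned to the rim.
    scaled = min(distance / range_m, 1.0) * radius_px
    return (dx_m / distance * scaled, dy_m / distance * scaled)
```

With `range_m=100` and `radius_px=50`, a rover 50 m to the east lands halfway to the rim, while a home base 500 m away sits on the rim in the correct direction, so the icon still tells the astronaut which way to head.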

Once we had identified every screen required and the information to be delivered at each instance, we then proceeded with designing. After multiple iterations, we arrived at final designs.

Here is an example of one such flow with the following steps.

Video Playback

Here’s what the above geosampling flow looks like in our final design. There are a lot of interesting elements in each screen to unpack and discuss.

Screen-by-screen breakdown

The spectrometer connection status is displayed at the top using familiar design standards. The astronaut can now scan a rock using the attached device.

Once a rock has been scanned by the spectrometer, the scanned samples screen pops up with the current scan highlighted. The different color scheme is to provide another visual cue about this section being on a different layer. The Home Screen layer cannot be triggered till the geosampling kit is closed. This is to avoid accidental ‘clicks’ and overload when multiple elements are layered over each other.

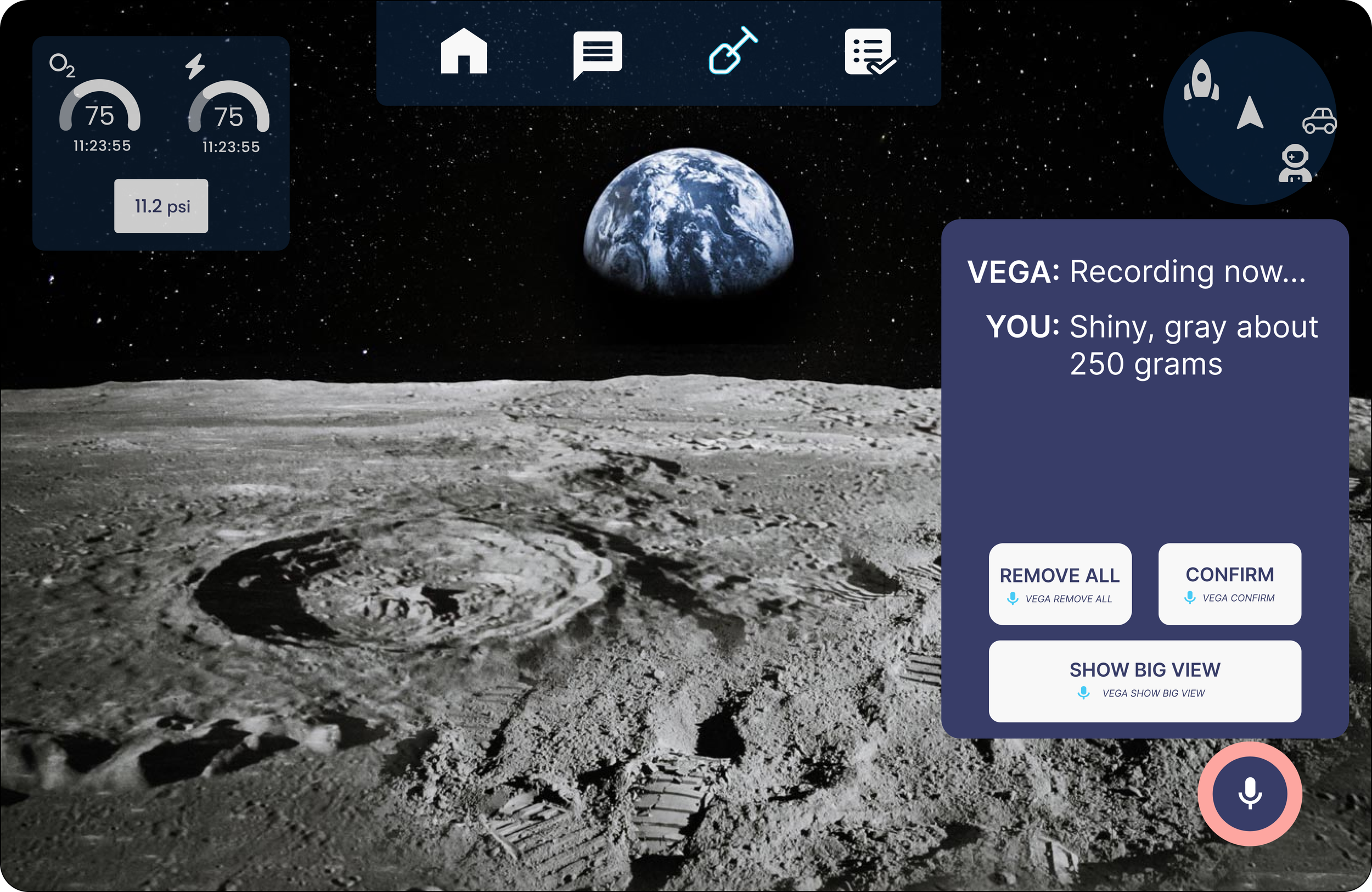

Each actionable/clickable item is distinctly called out in contrasted boxes. These include the scrollers to view the list of scanned samples, and of course, the expand, close, rescan and confirm buttons (which we’ll call standard interactions). Sticking to our design tenet, we’ve created dual interaction methods - head gaze, which is the primary means, and voice command, which proves to be extremely useful in geosampling. Below the standard interaction buttons we have a mic icon, indicating a voice-enabled command, with the phrase that needs to be said to trigger that option. This allows astronauts to proceed to the next most logical steps (hence called standard interactions) that would follow from this screen while still observing the rock and the surroundings.

On choosing confirm on the previous screen, VEGA - our voice assistant - notifies the astronaut that the sample has been recorded. (Choosing ‘rescan’ would have just allowed you to scan again, and ‘close’ would have closed the geosampling layer. We will see the ‘expand’ screen later.)

VEGA now asks if the astronaut wants to record additional notes. The captioning and ring highlight are secondary indicators.

The ‘show big view’ screen is a curious outlier to the general design tenets we established. It is the only screen in the geosampling layer that covers the central FOV with marked opaqueness and significant text. Multiple designs were explored, but the only way to adequately display significant chunks of uncategorized, user-recorded text is, unsurprisingly, through text. This is also the case for the ‘messaging’ functionality, where users’ texts need to be displayed.

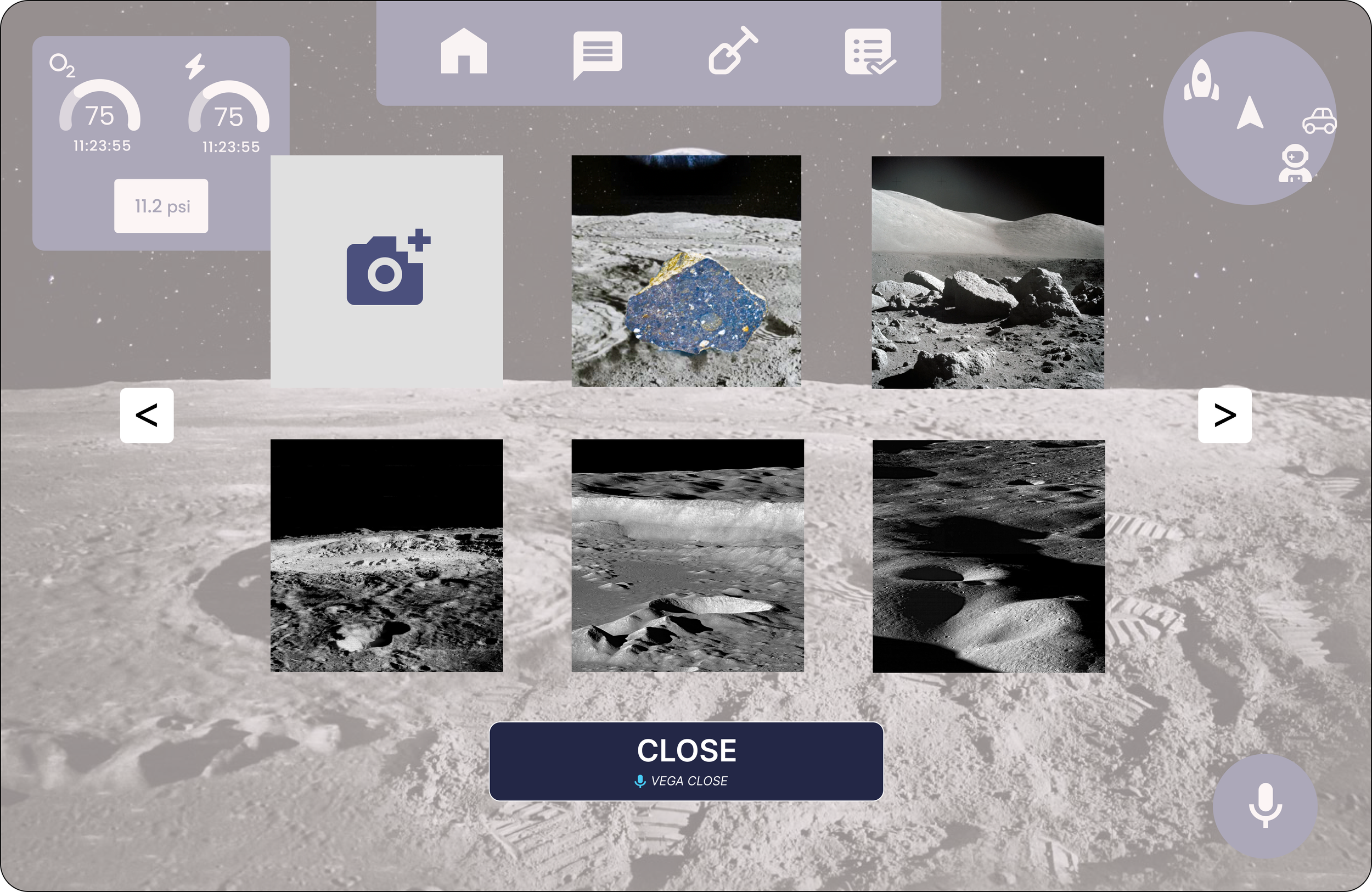

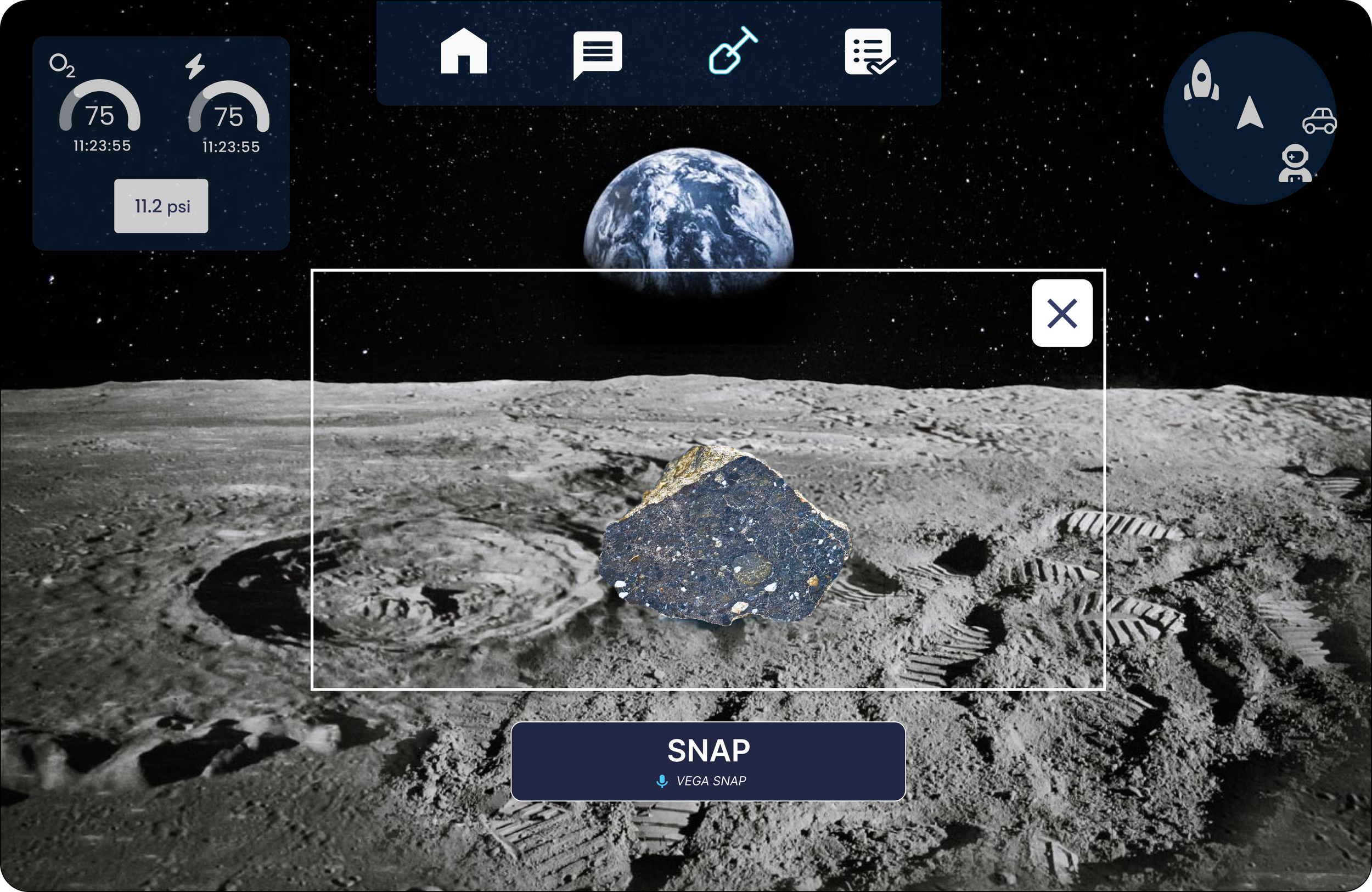

On opening ‘gallery’, the astronaut can access the photo log and activate the camera by clicking the top left box. While this screen is more centrally structured in the FOV, comfortable spacing and transparency make it less distracting.

The camera is very similar to the way a mobile camera interface behaves, with borders establishing the capture area.

Gallery screen with the snapped picture highlighted.

We kept calls to action few and simple to maintain the feasibility of dual interactions and build a convenient, repetitive process. For example, we could have made each sample its own button, as mobile apps do, instead of having to scroll to each one and select ‘expand’, but that complicates the voice command system since the phrases would have to be unique.

The astronaut calls out their note - shiny, grey, about 250 grams. The interface displays what was recorded so the astronaut can verify it and ‘remove’ it in case of errors. If the note is too big to be displayed, or if the astronaut wants to view all notes, the ‘show big view’ command is triggered. The command is slightly unconventional (we could have simply gone with ‘expand’), but since ‘expand’ is already a command phrase, we did not want it to be used for two different but related actions.
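The constraint that each spoken phrase maps to exactly one action can be enforced mechanically. The sketch below is a hypothetical registry (`VoiceCommands`, `register`, `resolve` and the action names are all illustrative, not part of our prototype) that rejects a phrase that is already bound.

```python
from typing import Dict, Optional


class VoiceCommands:
    """Registry that refuses to bind one spoken phrase to two actions."""

    def __init__(self) -> None:
        self._actions: Dict[str, str] = {}

    @staticmethod
    def _normalize(phrase: str) -> str:
        # Compare phrases case-insensitively and ignore extra whitespace.
        return " ".join(phrase.lower().split())

    def register(self, phrase: str, action: str) -> None:
        """Bind a phrase to an action name; duplicates raise ValueError."""
        key = self._normalize(phrase)
        if key in self._actions:
            raise ValueError(f"phrase already bound: {key!r}")
        self._actions[key] = action

    def resolve(self, spoken: str) -> Optional[str]:
        """Return the action bound to the spoken phrase, or None if unknown."""
        return self._actions.get(self._normalize(spoken))
```

Registering ‘expand’ for the sample details and then trying to register ‘expand’ again for the notes view fails immediately, which is the situation that led us to a separate phrase like ‘show big view’.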

The change to red ring highlight indicates that the system is recording user input.

The ‘minimize’ button is smaller because, once it is triggered, it is more difficult to return to this screen than it is from the other two options. Thus, we want to reduce the possibility of accidentally triggering it. It is also an attempt at bringing back familiarity, where the closing functions are small and at the corners. The top left, which is the standard close location, is more central in the field of view than the bottom right and has a higher chance of ‘lining up’ with the horizon, which is often where astronauts will gaze for balance.

Other ‘throwbacks’ to previous eras of design are apparent, done in the interest of clarity. We use scroll buttons that are consistent across screens instead of a modern scroll bar, and large, clearly demarcated minus buttons instead of the more seamless cross icon to delete a note. In an AR interface, particularly in limited gravity where precision movements are difficult, hit boxes need to be big and visible so that astronauts can easily trigger their intended outcomes.

Picture confirmation screen.

Big outcomes are the result of tiny critical decisions

It was only when an experienced NASA astronaut with over 100 days in the ISS remarked on our design as “one of the most elegant and structured designs that feels like it was truly built the way we would want it” that we realized the true impact of all our months of effort. One of the things we had remarked on as a team was just how much time we spent on making the smallest decisions. We spent days deliberating on the height of the close button’s ‘X’, weeks going back and forth on the spacing between two elements, and a whole two months to finalize our color palette - but it was a process that we all saw the value of and it was time each one of us was willing to dedicate. I mean, we were designing for astronauts who would be on the moon and the consequences of even slightly suboptimal design could be grave! When safety is the primary need, the smallest decisions must add up to create a seamless and proactive design. There is less room for experimentation and creativity, and every design decision must have a well researched motive. When you consider the seemingly superficial and challenge the suitability of convention, that’s when you begin to create a product that’s truly centered around the user’s every need.

Transferability of approach

While fully AR supported space missions are at least two years away, with UX for space systems still being a relatively novel and niche field, the same high stakes consequences are relevant to a field of design with a much more general audience - automotive UX. The research methodologies we ideated could also be applied to an automotive project, with similar insights and inferences that might be drawn from it. It got us thinking about the extended applications of AR in the automobile industry, and the opportunity for us to challenge ourselves as a group and work on something in this field of design in the next academic year.

Good teamwork precedes good design processes

Despite being a 10-member UX team, we became a close knit unit quite easily. We created a great working atmosphere, recognizing and respecting the cultural and academic diversity within our group, and this wonderful dynamic only made our designs that much more holistic. Each component was designed taking into account everybody’s perspectives, and each work meeting was always followed by interesting conversation. We could work long arduous hours without much exhaustion, share our honest opinions without fearing backlash and let our creativity flow unrestrained; by the end of the 10-month period, we had created a product made from the culmination of every designer’s very best effort.